Yang Nan, Nan Lin, Zhang Dingyi, Ku Tao. Research on image interpretation based on deep learning[J]. Infrared and Laser Engineering, 2018, 47(2): 203002-0203002(8). doi: 10.3788/IRLA201847.0203002


Research on image interpretation based on deep learning

- 1. Shenyang Institute of Automation, Chinese Academy of Sciences, Shenyang 110016, China

- 2. University of Chinese Academy of Sciences, Beijing 100049, China

- Received Date: 2017-08-05

- Rev Recd Date: 2017-10-11

- Publish Date: 2018-02-25

Abstract

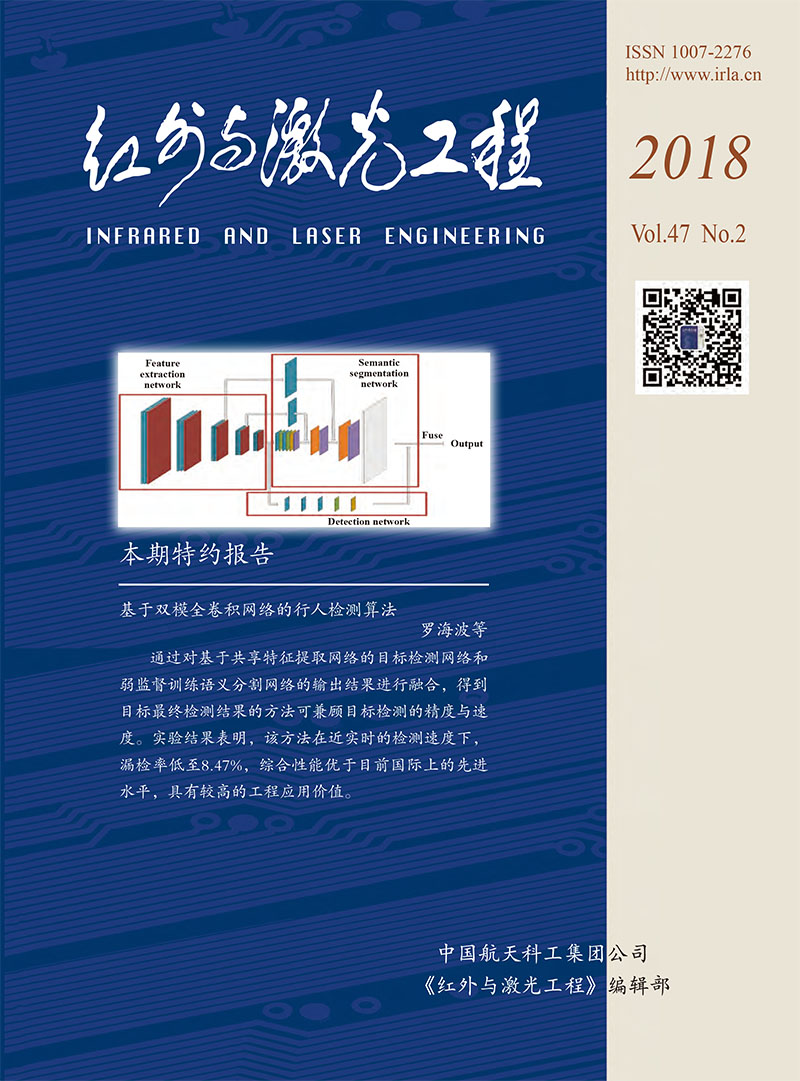

Convolutional neural networks (CNN) and recurrent neural networks (RNN) have developed rapidly in image classification, computer vision, natural language processing, speech recognition, machine translation, and semantic analysis, which has drawn researchers' close attention to the automatic generation of image interpretations by computers. At present, the main problems in image description are sparse input text data, over-fitting of the model, and difficult convergence of the model loss function. In this paper, NIC is used as the baseline model. To address data sparseness, the one-hot text encoding of the baseline model is replaced with word2vec, which is used to map the text. To prevent over-fitting, regularization terms are added to the model and Dropout is applied. To improve the memory of word order, the gated recurrent unit (GRU) is used as the associative memory unit for text generation. In the experiments, the Adam optimizer (AdamOptimizer) is used to update the parameters iteratively. The experimental results show that the improved model has fewer parameters, converges significantly faster, and produces smoother loss curves; the maximum loss is reduced to 2.91, and the model accuracy increases by nearly 15% compared with NIC. The experiments confirm that mapping text with word2vec clearly alleviates the data sparseness problem, that adding regularization terms and using Dropout effectively prevents over-fitting, and that introducing the GRU greatly reduces the number of trained parameters, speeds up convergence, and improves the accuracy of the whole model.
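As a rough illustration of two claims in the abstract (sparse one-hot input, and the parameter reduction from a GRU), the following minimal sketch counts recurrent-layer parameters using the standard textbook LSTM and GRU formulations. It assumes the NIC baseline decodes with an LSTM, as in the original Show and Tell model; the sizes `VOCAB_SIZE`, `EMBED_DIM`, and `HIDDEN_DIM` and all function names are hypothetical choices for illustration, not values or code from the paper.

```python
# Hypothetical sizes, chosen only for illustration (not taken from the paper).
VOCAB_SIZE = 10000   # number of distinct words in the caption vocabulary
EMBED_DIM = 256      # dimension of a dense word vector (e.g. from word2vec)
HIDDEN_DIM = 512     # size of the recurrent hidden state


def one_hot(index, vocab_size):
    """Return a one-hot vector: all zeros except a single 1.0 at `index`."""
    vec = [0.0] * vocab_size
    vec[index] = 1.0
    return vec


def gate_block_params(input_dim, hidden_dim):
    """Parameters of one gate block: input weights, recurrent weights, bias."""
    return hidden_dim * (input_dim + hidden_dim) + hidden_dim


def lstm_params(input_dim, hidden_dim):
    """Standard LSTM: input, forget, and output gates plus candidate cell."""
    return 4 * gate_block_params(input_dim, hidden_dim)


def gru_params(input_dim, hidden_dim):
    """Standard GRU: update and reset gates plus candidate state."""
    return 3 * gate_block_params(input_dim, hidden_dim)


# A one-hot word has exactly one non-zero entry out of VOCAB_SIZE,
# whereas a dense embedding uses only EMBED_DIM values per word.
word = one_hot(42, VOCAB_SIZE)
nonzero_fraction = sum(1 for v in word if v != 0.0) / len(word)

lstm_count = lstm_params(EMBED_DIM, HIDDEN_DIM)
gru_count = gru_params(EMBED_DIM, HIDDEN_DIM)

print("one-hot non-zero fraction:", nonzero_fraction)
print("LSTM recurrent parameters:", lstm_count)
print("GRU recurrent parameters: ", gru_count)
print("GRU / LSTM ratio:         ", gru_count / lstm_count)
```

Under these assumptions the GRU layer carries three quarters of the LSTM layer's recurrent parameters at equal input and hidden sizes. Library implementations may add extra bias terms, so exact counts in a given framework can differ slightly.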

