
-

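High-resolution synthetic aperture radar (SAR) images provide strong support for efficient and accurate intelligence interpretation. The MSTAR dataset provides a data foundation for research on SAR target recognition algorithms [1]. Its SAR images have a resolution of 0.3 m and can be used to classify 10 types of typical vehicle targets, such as tanks, armored vehicles, and artillery. Over nearly 30 years of research, SAR target recognition methods evaluated on the MSTAR dataset have made substantial progress in performance. However, these results also expose the weakness of current methods under extended operating conditions (EOCs). In SAR target recognition, extended operating conditions can arise from differences in the target, the background, the sensor, and other factors; their direct consequence is that the test samples to be recognized differ considerably from the available training samples. Addressing the difficulties posed by extended operating conditions is therefore a current research focus in SAR target recognition.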

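In general, SAR target recognition methods improve recognition performance by seeking the best combination of features and classifier. For feature extraction, references [2-6] describe the geometric shape of the target through its region, contour, and similar descriptors, and design recognition methods on that basis. The gray-level distribution can be described by mathematical transforms or signal processing techniques. Typical projection algorithms, such as principal component analysis (PCA) [7-8] and non-negative matrix factorization (NMF) [9], map high-dimensional SAR images to low-dimensional feature vectors. Signal processing algorithms applied to SAR image feature extraction include wavelet decomposition [10], the monogenic signal [11], and empirical mode decomposition [12]. These algorithms decompose the original SAR image into representations at multiple levels, giving a richer description of the target. Electromagnetic scattering features describe the backscattering characteristics of the target, the main representative being the scattering center [13-15]. References [13-14] propose SAR target recognition methods based on attributed scattering center (ASC) matching. The classification stage decides the class membership of the features extracted from the test sample. Projection features, which have a regular form and a uniform dimension, can generally be classified directly by conventional classifiers, typically the K-nearest neighbor (KNN) [16], the support vector machine (SVM) [17-18], and sparse representation-based classification (SRC) [8, 18-21]. Features such as contour points and scattering centers, which are irregularly arranged and vary in number, require dedicated classification strategies, such as the similarity measure between scattering center sets designed in references [13-15]. In addition, deep learning models, typically convolutional neural networks (CNNs) [22-24], have been widely applied to SAR target recognition. Deep learning models are trained directly on the original images, bypassing the traditional hand-crafted feature extraction process. Research has verified the effectiveness of deep learning for SAR target recognition, but these models require a large number of training samples. Under extended operating conditions the relevant training samples are very limited, so deep learning methods adapt poorly to such conditions.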

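This paper proposes a SAR target recognition method based on image block matching. Its core idea is to examine the target region block by block and to determine the target class more reliably through a comparative analysis of the individual sub-blocks. Under extended operating conditions, the SAR image of a target usually changes only locally, while samples of the same class remain highly similar. Observing and evaluating both the local differences and the consistency of SAR images can therefore cope with extended operating conditions more effectively. To this end, the SAR image to be recognized is partitioned around its center into 4 sub-blocks of equal area, each reflecting the local distribution of the target in one direction. On each sub-block, features are extracted with the monogenic signal to construct a feature vector; the spectral components obtained by monogenic decomposition effectively reflect the spectral characteristics and the local distribution of the target. For the feature vector of each sub-block, SRC yields a reconstruction error vector. The results of the different sub-blocks are fused by linear weighting with random weights, and statistics of the fused results are collected over multiple groups of weights. For the correct class, most sub-blocks have low reconstruction errors, so the 4 reconstruction errors have a small mean and a small variance. Conversely, for an incorrect class, the 4 reconstruction errors have a relatively large mean and possibly a large variance. A decision variable designed on this basis serves as the final classification criterion. Tests under typical conditions and scenarios constructed from the MSTAR dataset show that the method has significant performance advantages under both the standard operating condition and extended operating conditions.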

-

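Existing studies show that extended operating conditions in SAR images are mostly associated with local changes of the target. For example, under configuration variants only part of the target structure changes, which appears in the SAR image as a change in the local pixel distribution, geometric structure, and related information. It is therefore important to examine local changes of the target in the SAR image thoroughly. Conventional SAR target recognition methods extract features from, and classify, the whole original SAR image, so a local change often alters the global features and causes large errors in the final global feature matching. For this reason, the original SAR image is partitioned into blocks, the target characteristics are examined separately on each sub-block, and a more reliable classification is obtained by jointly analyzing the results of all sub-blocks.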

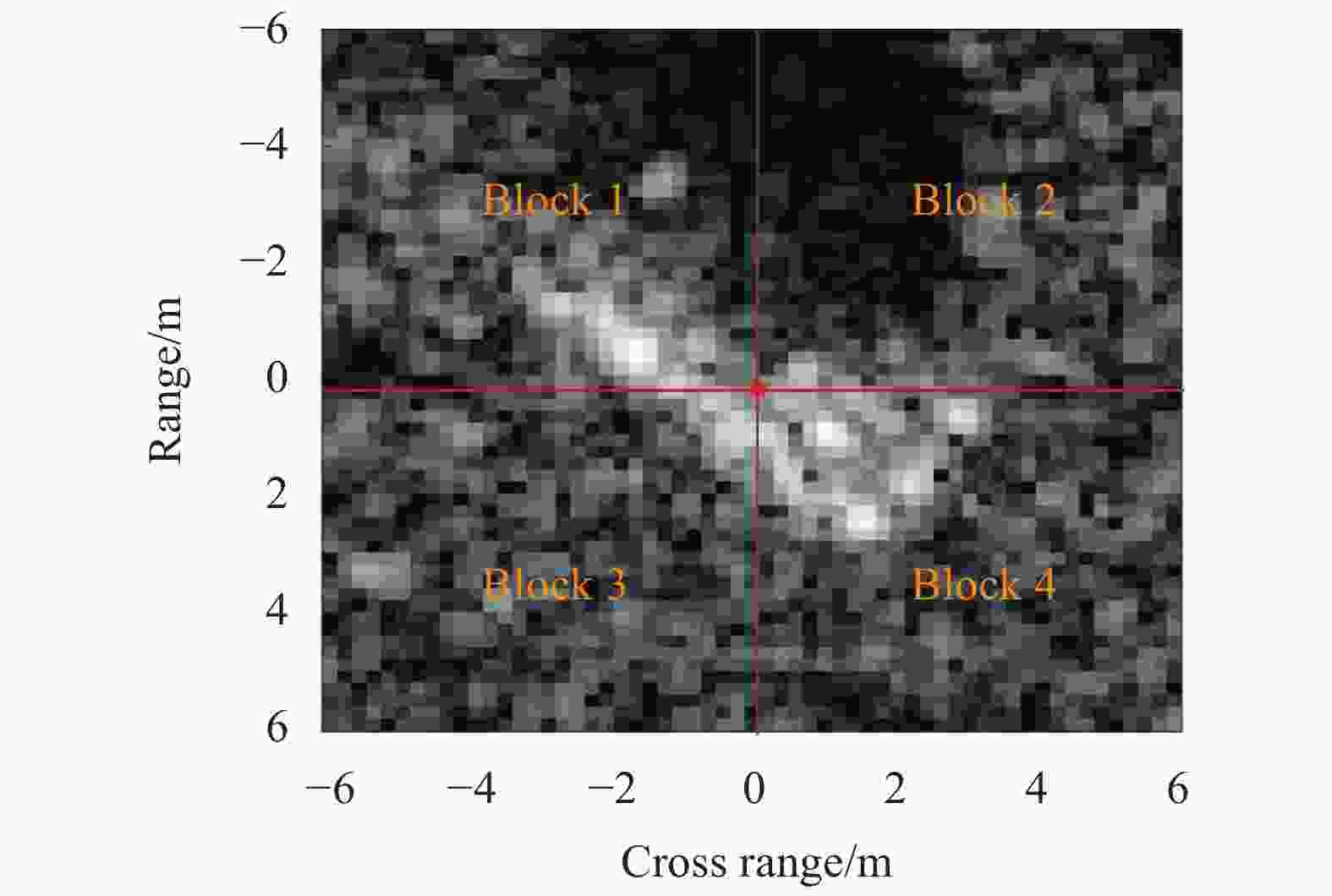

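Specifically, the partitioning algorithm consists of two main steps. First, the original image is centered, i.e., shifted so that the target centroid lies at the image center, which establishes a common reference for all training and test samples. Then, taking the image center as the focal point, the image is split along the azimuth and range directions into four equal quarters, giving 4 sub-block images. Figure 1 shows the partitioning result for an MSTAR SAR image. In use, the sub-blocks are processed independently and do not affect one another, so a local change in one sub-block has very little influence on the classification results of the other sub-blocks.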
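The two partitioning steps can be sketched in Python with NumPy as follows. This is a minimal sketch under stated assumptions: the target centroid is taken as the intensity-weighted centroid of the whole chip (the text does not say how the centroid is obtained; a segmented target mask would be a common alternative), the shift is circular, and image rows and columns are assumed to correspond to the range and azimuth directions. The function names `center_target` and `split_quadrants` are hypothetical.

```python
import numpy as np


def center_target(img):
    """Shift the image so its intensity-weighted centroid sits at the image center.

    Assumption: the centroid is computed from raw pixel magnitudes of the whole
    chip; np.roll performs a circular shift, so the chip size is preserved.
    """
    h, w = img.shape
    mag = np.abs(img).astype(float)
    total = mag.sum()
    if total == 0:
        return img.copy()
    rows, cols = np.indices((h, w))
    cy = (rows * mag).sum() / total
    cx = (cols * mag).sum() / total
    # Integer shift that moves (cy, cx) onto (h // 2, w // 2).
    dy = int(round(h // 2 - cy))
    dx = int(round(w // 2 - cx))
    return np.roll(img, shift=(dy, dx), axis=(0, 1))


def split_quadrants(img):
    """Cut a centered image into 4 equal sub-blocks along the two image axes
    (assumed here to be range and azimuth)."""
    h, w = img.shape
    ch, cw = h // 2, w // 2
    return [img[:ch, :cw], img[:ch, cw:], img[ch:, :cw], img[ch:, cw:]]
```

Because the circular shift keeps the chip size unchanged, every training and test sample yields four sub-blocks of identical shape, which is what separate per-sub-block dictionaries require.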

-

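According to reference [11], the monogenic signal can be used for SAR image decomposition and feature extraction. For a SAR image $f(z)$ to be decomposed, where $z = (x,y)^{\rm T}$ is the pixel coordinate, its monogenic signal $f_M(z)$ is

$$f_M(z) = f(z) - (i, j)\, f_R(z) \tag{1}$$

where $f_R(z)$ is the Riesz transform of the input image, and $i$ and $j$ are both imaginary units. Based on Eq. (1), three types of monogenic features are defined:

$$\begin{aligned} A(z) &= \sqrt{f(z)^2 + f_x(z)^2 + f_y(z)^2} \\ \varphi(z) &= \operatorname{atan2}\left(\sqrt{f_x(z)^2 + f_y(z)^2},\; f(z)\right) \\ \theta(z) &= \arctan\left(f_y(z) / f_x(z)\right) \end{aligned} \tag{2}$$

where $f_x(z)$ and $f_y(z)$ are the components of the monogenic signal along the two axes, that is, $f_R(z) = (f_x(z), f_y(z))$; $A(z)$ is the amplitude; and $\varphi(z)$ and $\theta(z)$ are the local phase and the local orientation, respectively. The three types of monogenic features effectively reflect multi-level characteristics of the image, including local amplitude, phase, and orientation, and combining them provides more complete information for image analysis. Therefore, the monogenic signal is used to describe the image features of each sub-block: following reference [11], the three types of features obtained by the decomposition are vectorized, concatenated, and downsampled to obtain a low-dimensional feature vector. Each sub-block is then classified based on its monogenic feature vector.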
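A minimal single-scale sketch of the three monogenic features, assuming the standard frequency-domain Riesz transform (transfer functions $-iu/|\omega|$ and $-iv/|\omega|$) and the usual definitions $A = \sqrt{f^2 + f_x^2 + f_y^2}$, $\varphi = \operatorname{atan2}(\sqrt{f_x^2 + f_y^2}, f)$ and $\theta = \arctan(f_y/f_x)$. Reference [11] works on a multi-scale, band-pass filtered monogenic representation; this sketch omits the band-pass filtering, and the downsampling stride `step` and the function names are hypothetical choices for illustration.

```python
import numpy as np


def riesz_components(f):
    """Return (f_x, f_y), the two Riesz-transform components of a real image."""
    h, w = f.shape
    u = np.fft.fftfreq(w)[np.newaxis, :]   # horizontal frequencies
    v = np.fft.fftfreq(h)[:, np.newaxis]   # vertical frequencies
    radius = np.sqrt(u ** 2 + v ** 2)
    radius[0, 0] = 1.0                      # avoid 0/0 at DC; numerators are 0 there
    F = np.fft.fft2(f)
    fx = np.real(np.fft.ifft2(F * (-1j * u / radius)))
    fy = np.real(np.fft.ifft2(F * (-1j * v / radius)))
    return fx, fy


def monogenic_features(f):
    """Local amplitude A, local phase phi and local orientation theta."""
    f = np.asarray(f, dtype=float)
    fx, fy = riesz_components(f)
    amplitude = np.sqrt(f ** 2 + fx ** 2 + fy ** 2)
    phase = np.arctan2(np.sqrt(fx ** 2 + fy ** 2), f)     # in [0, pi]
    # arctan(fy / fx) without division by zero: fold atan2 into (-pi/2, pi/2].
    theta = np.arctan2(fy, fx)
    theta = np.where(theta > np.pi / 2, theta - np.pi, theta)
    theta = np.where(theta <= -np.pi / 2, theta + np.pi, theta)
    return amplitude, phase, theta


def monogenic_feature_vector(block, step=4):
    """Downsample each feature map by `step`, vectorize, and concatenate."""
    maps = monogenic_features(block)
    return np.concatenate([m[::step, ::step].ravel() for m in maps])
```

Because the Riesz kernels are odd and purely imaginary, the filtered outputs of a real image are real, which is why only the real part of each inverse FFT is kept.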

-

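Sparse representation-based classification linearly fits an unknown sample with the training samples of the different classes and decides according to the fitting error. SRC has been widely used and validated in SAR target recognition [8, 18-21]. For a test sample $y$, its sparse representation is

$$\hat{\alpha} = \mathop{\arg\min}_{\alpha} \|\alpha\|_0 \quad \text{s.t.} \quad \|y - D\alpha\|_2^2 \le \varepsilon \tag{3}$$

where $D = [D^1, D^2, \cdots, D^C] \in \mathbb{R}^{d \times N}$ is the global dictionary built from the $C$ training classes, $\alpha$ is the sparse representation coefficient vector, and $\varepsilon$ is the error tolerance. Under the $\ell_0$-norm constraint, $\alpha$ is sparse. From the solution $\hat{\alpha}$, the reconstruction error of each class is computed as

$$r(i) = \|y - D^i \hat{\alpha}_i\|_2^2, \quad i = 1, 2, \cdots, C \tag{4}$$

where $\hat{\alpha}_i$ is the part of the sparse coefficient vector associated with class $i$, and $r(i)$ is the reconstruction error of class $i$. The conventional strategy assigns the test sample to the class with the smallest error: the training class that matches the test sample has a reconstruction error markedly smaller than those of the other classes, so the class can be determined on this basis.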
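Solving Eq. (3) exactly is NP-hard, so it is approximated in practice. The sketch below assumes orthogonal matching pursuit (OMP), a common greedy solver that the text does not name, and then evaluates the per-class errors of Eq. (4). The sparsity level, the unit-norm normalization of atoms and test vector, and the names `omp` and `src_residuals` are hypothetical choices.

```python
import numpy as np


def omp(D, y, sparsity, tol=1e-6):
    """Orthogonal matching pursuit: greedy approximation of the l0 problem."""
    residual = y.copy()
    support = []
    alpha = np.zeros(D.shape[1])
    coef = np.zeros(0)
    for _ in range(sparsity):
        if np.linalg.norm(residual) <= tol:
            break
        # Pick the atom most correlated with the current residual.
        k = int(np.argmax(np.abs(D.T @ residual)))
        if k in support:
            break
        support.append(k)
        # Re-fit all selected atoms jointly by least squares.
        coef, *_ = np.linalg.lstsq(D[:, support], y, rcond=None)
        residual = y - D[:, support] @ coef
    if support:
        alpha[support] = coef
    return alpha


def src_residuals(D, labels, y, sparsity=10):
    """Per-class reconstruction errors r(i) = ||y - D^i alpha_i||_2^2."""
    D = D / np.linalg.norm(D, axis=0, keepdims=True)   # unit-norm atoms
    y = y / np.linalg.norm(y)
    alpha = omp(D, y, sparsity)
    classes = np.unique(labels)
    errors = np.array([
        np.sum((y - D[:, labels == c] @ alpha[labels == c]) ** 2)
        for c in classes
    ])
    return classes, errors
```

In the proposed method this routine would be run once per sub-block, each with its own dictionary, yielding the errors $r_k^i$ that are fused in the next step.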

-

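SRC is applied separately to the 4 sub-blocks of the original SAR image to obtain their reconstruction error vectors. The results of the different sub-blocks are fused by linear weighting. Because a single fixed weight vector is limiting, multiple random weight vectors are used, and the weight matrix is designed as

$$W = [w_1\; w_2\; \cdots\; w_N] = \begin{bmatrix} w_{11} & w_{12} & \cdots & w_{1N} \\ w_{21} & w_{22} & \cdots & w_{2N} \\ w_{31} & w_{32} & \cdots & w_{3N} \\ w_{41} & w_{42} & \cdots & w_{4N} \end{bmatrix} \tag{5}$$

where each column is one random weight vector and satisfies the constraint

$$\sum_{k=1}^{4} w_{kn} = 1, \quad w_{kn} \ge 0, \quad n = 1, 2, \cdots, N \tag{6}$$

Under the constraint of Eq. (6), each weight vector is determined randomly, giving $N$ random weight vectors in total. Each weight vector may assign different weights to different sub-blocks, so combining them yields a more robust fusion result and avoids the instability that a conventional fixed choice of weights may bring.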
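One way to generate the weight matrix of Eq. (5), assuming the constraint in Eq. (6) means non-negative weights that sum to one: draw each column from a flat Dirichlet distribution, which samples uniformly from that simplex. The sampling distribution, the function name `random_weight_matrix`, and its parameters are assumptions; the text only says the weight vectors are random.

```python
import numpy as np


def random_weight_matrix(n_vectors, n_blocks=4, seed=None):
    """Return a (n_blocks, n_vectors) matrix whose columns are random weight vectors.

    Assumption: each column is drawn from a flat Dirichlet distribution, i.e.
    uniformly from the simplex {w >= 0, sum(w) = 1}.
    """
    rng = np.random.default_rng(seed)
    # dirichlet(...) returns shape (n_vectors, n_blocks); transpose so columns are vectors.
    return rng.dirichlet(np.ones(n_blocks), size=n_vectors).T
```

Normalizing independent uniform draws by their sum would also satisfy the constraint, but it does not sample the simplex uniformly; the Dirichlet draw avoids that bias.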

记第

$i$ 类对第$k$ $(k = 1,2, \cdots ,4)$ 个子块的重构误差为$r_k^i$ ,随机加权融合描述为:以公式(7)为基础,采用公式(5)中的所有权值矢量重复操作,第

$i$ 类则有$N$ 个结果$R = \left[ {R_1^i\;R_2^i\; \cdots \;R_N^i} \right]$ ,记为融合误差矢量。若第

$i$ 类为实际类别,则各个子块相应的重构误差都较小。此时,在随机权值矢量下,融合误差矢量中各个元素的数值较小且变化较为平缓。反之,若当前测试样本并非来自第$i$ 类,则各个子块的重构误差相对较大,最终在随机权值下的融合误差矢量均值和反差都相对较大。因此,根据以上统计特征,定义决策变量为:式中:

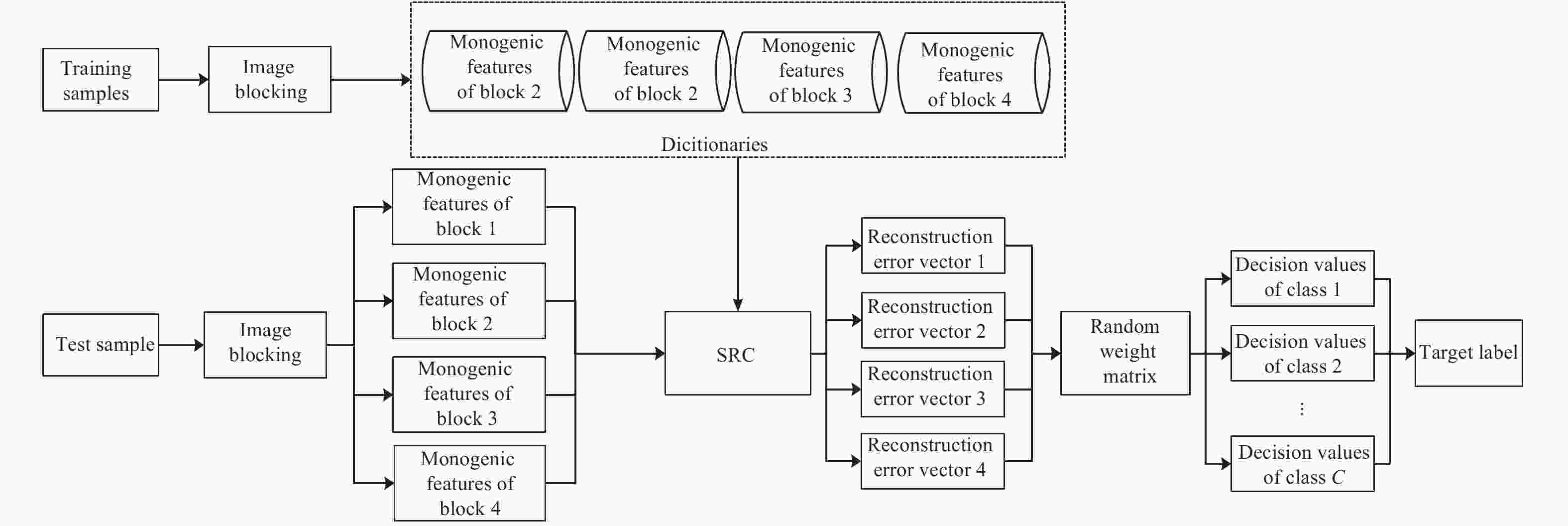

$m$ 和$\sigma $ 对应任一类别融合误差矢量的均值及方差;$\lambda $ 为大于零的调节参数。按照公式(8)可分别计算各个类别对应的决策变量${J_1},{J_2}, \cdots ,{J_C}$ ,具有最小值的类别即被判断为测试样本真实目标类别。图2显示了提出方法的基本实施流程。采用图像分块算法对所有训练样本进行处理,并对每个子块进行单演特征矢量提取。在此基础上,形成各个子块的字典。对于测试样本,相应进行分块操作并获得相应的单演特征矢量。基于SRC计算重构误差并进行随机加权融合处理,最终根据不同类别的决策变量获得目标所属类别。
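A vectorized sketch of the fusion in Eq. (7) and the decision rule in Eq. (8), assuming the decision variable has the form $J = m + \lambda\sigma$ with $\sigma$ taken as the variance of the fused error vector, as the text describes. The array layout and the names `decision_variables` and `classify` are hypothetical.

```python
import numpy as np


def decision_variables(block_errors, W, lam=1.0):
    """Return J_i = m_i + lam * sigma_i for every class i.

    block_errors: array of shape (4, C); entry [k, i] is r_k^i, the SRC
                  reconstruction error of class i on sub-block k.
    W:            weight matrix of shape (4, N); each column is one weight vector.
    """
    # Eq. (7) for all classes and weight vectors at once: R[i, n] = sum_k w_kn * r_k^i
    R = block_errors.T @ W            # shape (C, N): one fused error vector per class
    m = R.mean(axis=1)                # mean of each fused error vector
    sigma = R.var(axis=1)             # variance of each fused error vector
    return m + lam * sigma


def classify(block_errors, W, lam=1.0):
    """Index of the class with the smallest decision variable."""
    return int(np.argmin(decision_variables(block_errors, W, lam)))
```

Because every fused value is a convex combination of the four sub-block errors, a class with one locally changed sub-block but three small errors still keeps a small mean and a small spread, which is the behavior the decision variable is meant to reward.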

3.1. Sparse Representation Classification

3.2. Random Weight Matrix

-

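The MSTAR dataset, released by the U.S. DARPA and AFRL, contains the 10 classes of targets shown in Figure 3 with an image resolution of 0.3 m, and can be used to set up scenarios for analyzing the method. Five comparison methods are used: the Zernike moment-based method in reference [2]; the monogenic signal method in reference [11] (denoted Mono); the attributed scattering center matching method in reference [13] (denoted ASC Matching); the all-convolutional network in reference [22] (A-ConvNet, denoted CNN1); and the translation- and rotation-invariant network designed in reference [24] (denoted CNN2). The first three comparison methods focus on feature extraction and improve SAR target recognition performance through different types of features; the last two are currently popular deep learning methods.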

-

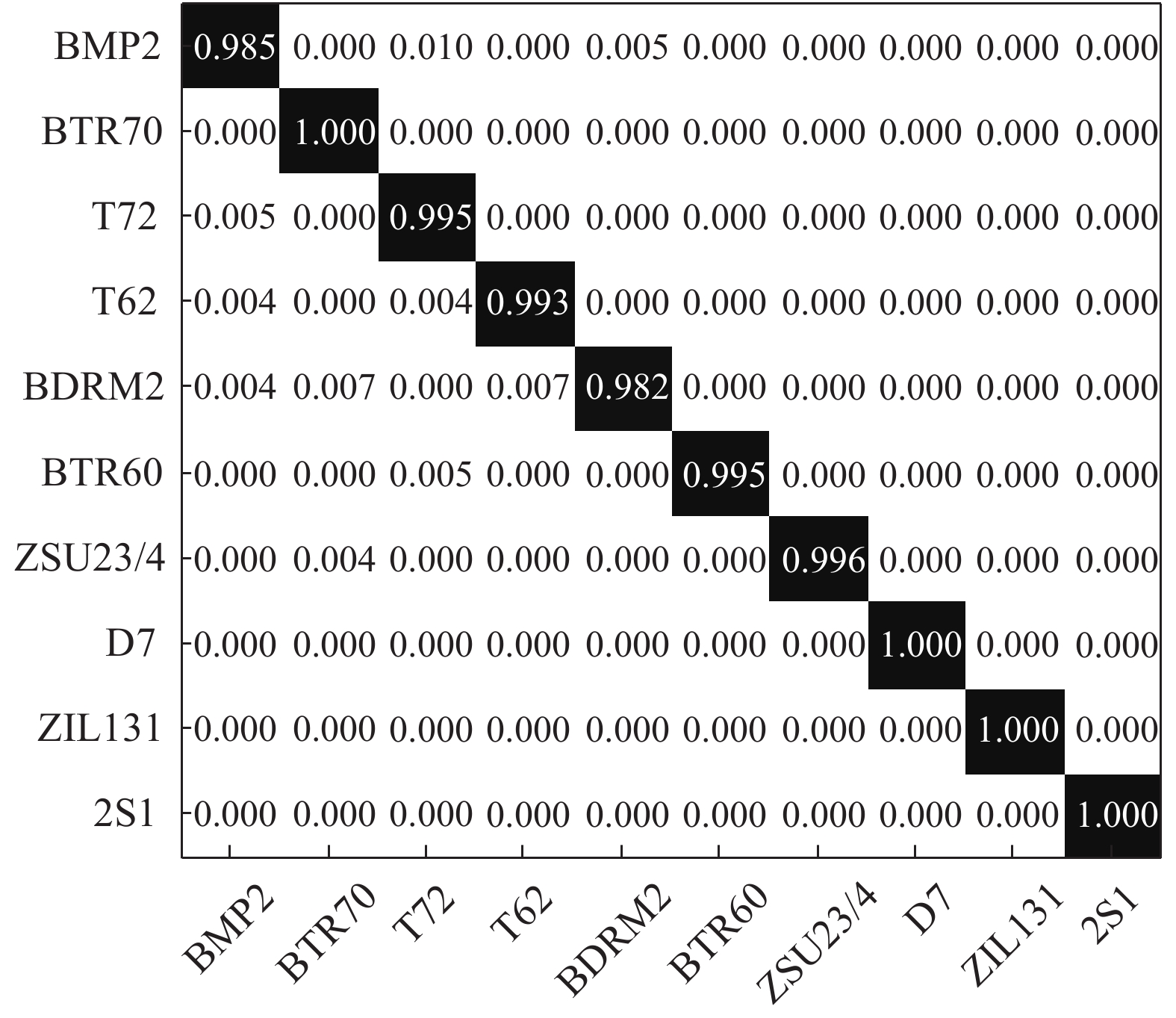

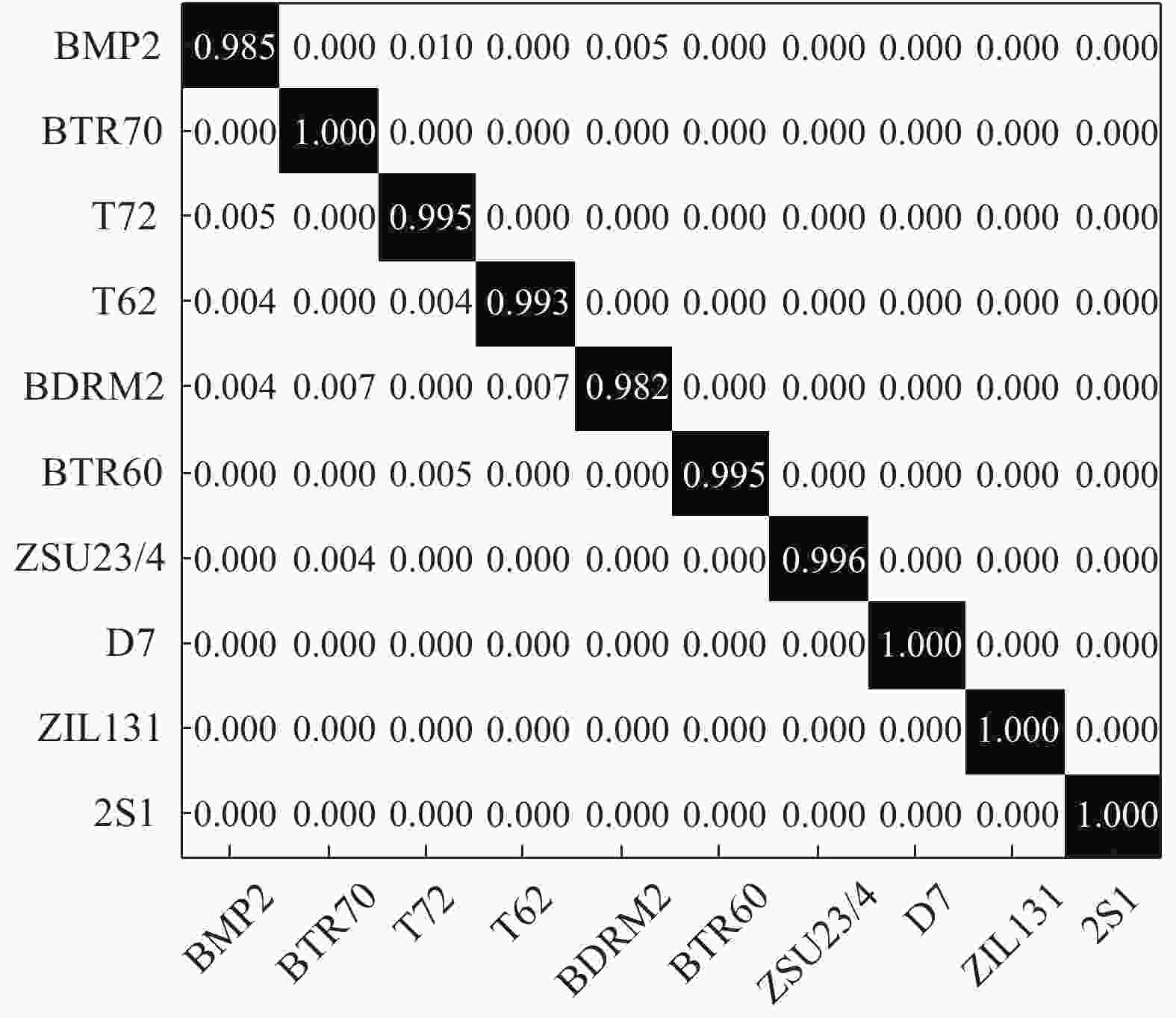

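Test condition 1 is the standard operating condition (SOC), which is relatively easy. Table 1 lists the setup of test condition 1, which includes all the targets in Figure 3. The training and test samples are SAR images acquired at 17° and 15° depression angles, respectively, and come from the same targets and configurations. Figure 4 shows the confusion matrix of the proposed method under the standard operating condition. The correct recognition rates of the 10 targets, given by the diagonal elements, are all above 98.5%. The average recognition rate Pav is defined as the ratio of correctly recognized samples to the total number of samples; over the 10 targets, Pav = 99.32%, which demonstrates the effectiveness of the proposed method. Table 2 compares the recognition performance of the methods under the standard operating condition. All methods achieve average recognition rates above 98%, which also reflects that recognition under the standard operating condition is relatively easy. The proposed method achieves the highest recognition rate under this condition, showing its advantage.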

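Table 1. Relevant setup for test condition 1

| Type | Training depression | Training samples | Test depression | Test samples |
|---|---|---|---|---|
| BMP2 | 17° | 232 | 15° | 193 |
| BTR70 | 17° | 232 | 15° | 194 |
| T72 | 17° | 231 | 15° | 194 |
| T62 | 17° | 297 | 15° | 271 |
| BRDM2 | 17° | 296 | 15° | 272 |
| BTR60 | 17° | 255 | 15° | 193 |
| ZSU23/4 | 17° | 297 | 15° | 272 |
| D7 | 17° | 297 | 15° | 273 |
| ZIL131 | 17° | 297 | 15° | 272 |
| 2S1 | 17° | 297 | 15° | 271 |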

Table 2. Results under test condition 1

| Method | Pav |
|---|---|
| Proposed | 99.32% |
| Zernike | 98.12% |
| Mono | 98.64% |
| ASC Matching | 98.32% |
| CNN1 | 99.08% |
| CNN2 | 99.12% |
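The two figures of merit used above can be computed from a confusion matrix as in the sketch below: per-class rates are the diagonal divided by the row sums, and Pav is the trace divided by the total sample count, matching the definition of Pav given for test condition 1. The function name `recognition_rates` is hypothetical.

```python
import numpy as np


def recognition_rates(confusion):
    """Per-class correct recognition rates and the overall Pav.

    confusion[i, j] = number of test samples of true class i assigned to class j.
    """
    confusion = np.asarray(confusion, dtype=float)
    per_class = np.diag(confusion) / confusion.sum(axis=1)
    p_av = np.trace(confusion) / confusion.sum()   # correct samples / all samples
    return per_class, p_av
```

This sample-weighted Pav differs from the unweighted mean of per-class rates when classes have different test set sizes: for the two-class matrix `[[98, 2], [1, 199]]`, the per-class rates are 0.98 and 0.995, Pav is 297/300 = 0.99, and the unweighted mean would be 0.9875.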

-

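Owing to the complexity of real scenarios, the target itself, the background environment, the sensor, and other factors may all change, so extended operating conditions are more common in practice. Three typical extended operating conditions are therefore set up for testing.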

(1) Test condition 2

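Test condition 2 is configuration variation; Table 3 gives the experimental data and setup. Here, different configurations of BMP2 and T72 are used for training and for testing. Table 4 lists the recognition rates of the methods. Compared with test condition 1, the performance of every method drops because of the configuration differences, and the deep learning methods CNN1 and CNN2 show the most significant drop in average recognition rate. The proposed method partitions the test SAR image into blocks and analyzes each region locally, which allows the local image changes caused by configuration differences to be examined thoroughly. The results show that the proposed method achieves a higher recognition rate, indicating its robustness to configuration variation.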

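Table 3. Relevant setup for test condition 2

| Type | Training depression (°) | Training configuration | Training samples | Test depression (°) | Test configuration | Test samples |
|---|---|---|---|---|---|---|
| BMP2 | 17 | 9563 | 233 | 15 | 9566 | 196 |
| | | | | | c21 | 196 |
| BTR70 | 17 | c71 | 233 | 15 | c71 | 196 |
| T72 | 17 | 132 | 232 | 15 | 812 | 195 |
| | | | | | s7 | 191 |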

Table 4. Results under test condition 2

| Method | Pav |
|---|---|
| Proposed | 98.46% |
| Zernike | 96.82% |
| Mono | 97.82% |
| ASC Matching | 98.02% |
| CNN1 | 96.54% |
| CNN2 | 97.02% |

(2) Test condition 3

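Test condition 3 is depression angle variation; Table 5 gives the corresponding training and test sets. The algorithms are trained on samples at a 17° depression angle and tested on samples at 30° and 45°, to examine the effect of different depression angle differences. Table 6 shows the results of the methods. At 30°, the depression angle difference has a relatively small effect, and all methods maintain recognition rates above 94%. At 45°, however, the effect is very significant and the average recognition rates of all methods drop sharply, indicating that the depression angle difference now causes large changes in the SAR images. As with configuration variation, CNN1 and CNN2 show the most significant performance drop. Through image block matching, multi-weight fusion, and statistical analysis, the proposed method can better analyze local changes of the target and thus reach reliable decisions.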

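Table 5. Relevant setup for test condition 3

| Type | Training depression | Training samples | Test depression | Test samples |
|---|---|---|---|---|
| 2S1 | 17° | 288 | 30° | 285 |
| | | | 45° | 302 |
| BRDM2 | 17° | 289 | 30° | 284 |
| | | | 45° | 302 |
| ZSU23/4 | 17° | 289 | 30° | 287 |
| | | | 45° | 302 |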

Table 6. Results under test condition 3

| Method | Pav (30°) | Pav (45°) |
|---|---|---|
| Proposed | 97.12% | 73.63% |
| Zernike | 94.82% | 68.24% |
| Mono | 96.35% | 70.92% |
| ASC Matching | 96.72% | 71.36% |
| CNN1 | 95.82% | 66.74% |
| CNN2 | 96.24% | 67.56% |

-

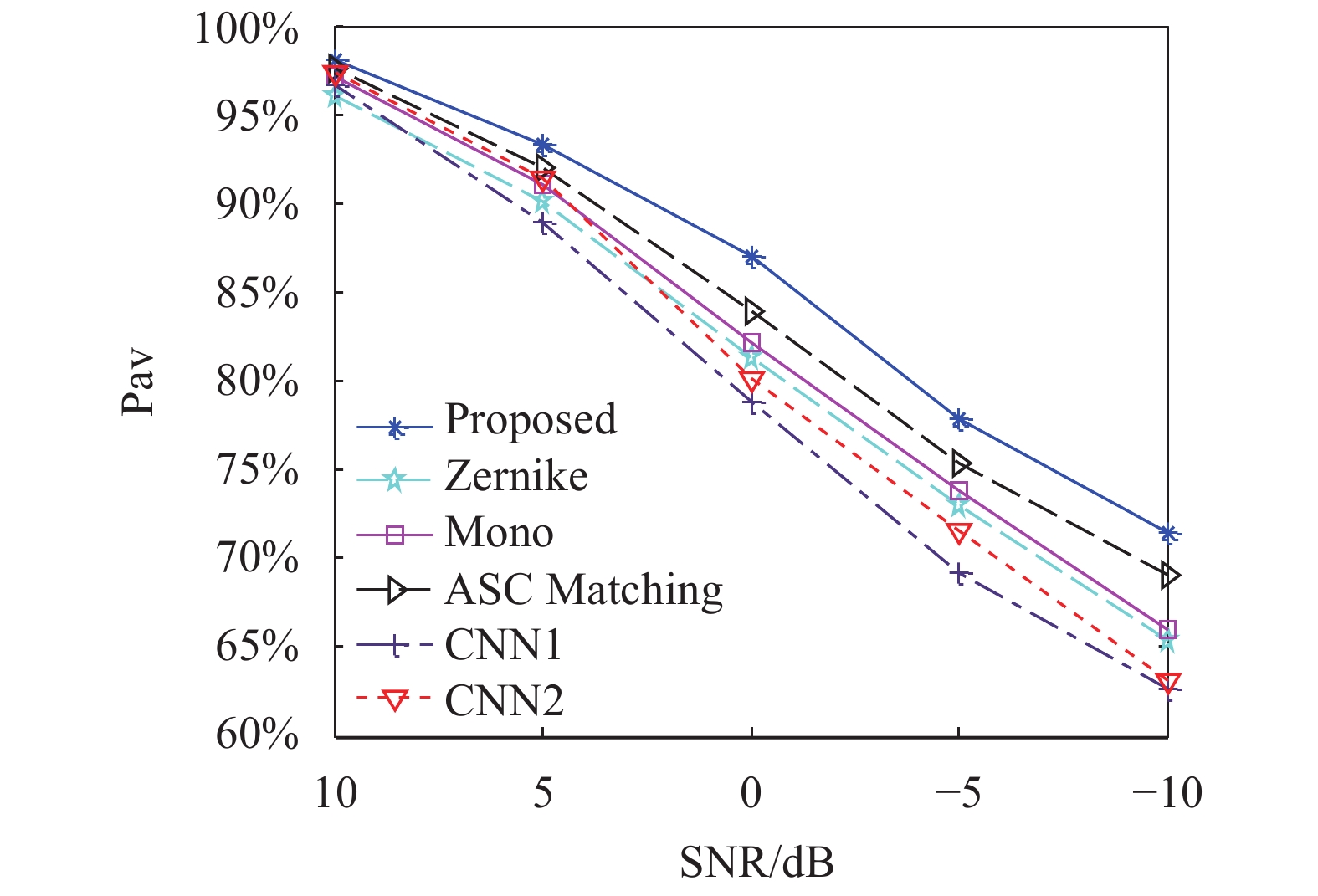

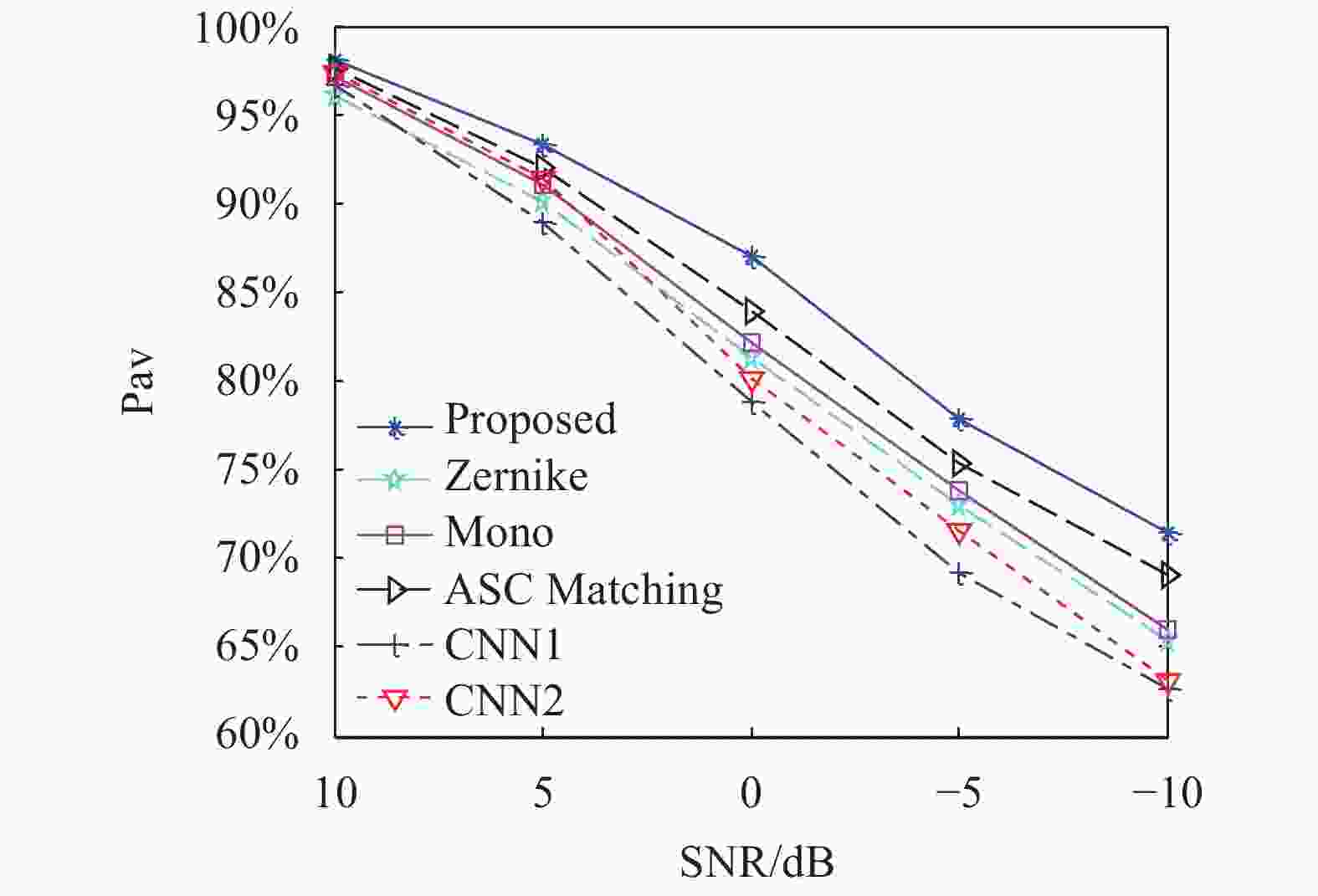

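Test condition 4 is noise corruption. When test samples have a low signal-to-noise ratio (SNR), they differ considerably from high-SNR samples, which makes recognition markedly harder. The original MSTAR test samples have an SNR similar to that of the training samples, so they cannot be used directly to test the noise robustness of SAR target recognition methods. Therefore, noisy test sets are first generated by adding noise, and the noise robustness of the methods is then verified. Figure 5 shows the results of the methods: the proposed method maintains more stable performance as the noise level increases. As the SNR of the test samples decreases, the performance of the deep learning methods CNN1 and CNN2 drops considerably. The scattering center matching method is relatively robust under noise, mainly because the attributed scattering center extraction process effectively removes the influence of noise. The results in Figure 5 verify the performance advantage of the proposed method under noise corruption.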
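The text does not specify how the noisy test sets are generated. A common choice, assumed in the sketch below, is additive white Gaussian noise scaled so that the ratio of mean signal power to noise power equals a target SNR in dB, with complex Gaussian noise when the input is complex-valued SAR data. The function name `add_noise_at_snr` and this SNR definition are assumptions for illustration.

```python
import numpy as np


def add_noise_at_snr(img, snr_db, seed=None):
    """Return img plus white Gaussian noise scaled to the requested SNR (dB).

    Assumptions: the noise is additive, white and Gaussian (complex if the input
    is complex), and SNR = mean(|signal|^2) / mean(|noise|^2).
    """
    rng = np.random.default_rng(seed)
    img = np.asarray(img)
    signal_power = np.mean(np.abs(img) ** 2)
    noise_power = signal_power / (10.0 ** (snr_db / 10.0))
    if np.iscomplexobj(img):
        # Split the noise power equally between real and imaginary parts.
        noise = np.sqrt(noise_power / 2) * (
            rng.standard_normal(img.shape) + 1j * rng.standard_normal(img.shape)
        )
    else:
        noise = np.sqrt(noise_power) * rng.standard_normal(img.shape)
    return img + noise
```

The sketch works directly in the image domain; sweeping `snr_db` downward over the same test set produces the sequence of increasingly noisy test sets that a robustness curve like Figure 5 requires.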

4.1. Experimental Setup

4.2. Results and Discussion

4.2.1. Test Condition 1

4.2.2. Extended Operating Conditions

4.2.3. Test Condition 4

-

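This paper proposes a SAR target recognition method based on block matching. The original SAR image is partitioned into 4 sub-blocks, each reflecting the local characteristics in a different direction. The monogenic signal describes the spectral characteristics and local features of each sub-block and is used to construct its feature vector. The monogenic feature vectors of the 4 sub-blocks are classified separately by SRC to obtain reconstruction error vectors, which are then fused by weighting with a random weight matrix. Statistical analysis of the fused results under multiple weight vectors yields the decision variable that determines the sample class. Experiments on the MSTAR dataset cover 4 test conditions, including the standard operating condition and extended operating conditions, and the results show that the proposed method has a notable performance advantage over existing methods.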
