-

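Synthetic aperture radar (SAR) observes and reconnoiters regions of interest on the ground through microwave imaging. Target recognition locates targets in large-scene SAR images and analyzes their features to determine the category each target belongs to [1]. Target recognition algorithms generally process a test sample with feature extraction followed by a classifier. Commonly used features fall into three groups. Geometric features describe the shape and structure of SAR targets, such as contours, sizes, and regions [2-4]. Electromagnetic scattering features, such as scattering centers and polarization [5-7], reflect the scattering mechanism of the target. Transform-domain features obtain stable representations through mathematical projection or domain transformation [8-12], for example principal component analysis (PCA) [8], the monogenic signal [10-11], and mode decomposition [12]. Commonly used classifiers include the nearest neighbor classifier [8], which decides by the minimum-distance or maximum-similarity criterion; the support vector machine (SVM) [13-14], which learns a decision surface in the feature space from a large number of training samples; and sparse representation-based classification (SRC) [15-17], which decides by comparing the reconstruction errors of a sparse reconstruction. In recent years, deep learning has developed rapidly, and image processing based on convolutional neural networks (CNN) has advanced considerably. CNN-based SAR target recognition methods have likewise been steadily proposed and validated [18-21]. However, when training samples are few or do not sufficiently cover the test conditions, the classification performance of CNN generally drops significantly. As a result, CNN still adapts poorly to extended operating conditions (EOC) in SAR target recognition. To address this, this paper proposes a SAR target recognition method based on decision fusion of CNN and SRC. A test sample is first classified by the designed CNN, which outputs the posterior probabilities that the sample belongs to each training class. The probability vector is analyzed to compute a classification confidence, which determines whether the current decision (assigning the test sample to the training class with the largest posterior probability) is reliable. If the decision is reliable, the CNN result is output directly. Otherwise, several training classes with relatively large posterior probabilities are selected to construct a dictionary, and SRC further classifies the test sample. Finally, the decisions of CNN and SRC are fused based on Bayesian theory, and the target category of the test sample is determined from the fused result. In the experiments, the method is tested on the MSTAR dataset under four experimental conditions covering both simple and relatively complex scenarios. A comprehensive comparison with several existing SAR target recognition methods verifies the advantages of the proposed method.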

-

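CNN extends deep learning to the domain of two-dimensional signals (images) and provides a new, reliable tool for image processing and interpretation. A CNN generally consists of convolution layers, pooling layers, and fully-connected layers; the convolution operations in the convolution layers analyze the original input image and produce multi-level feature maps. The convolution layer is the core of a CNN: by learning different convolution kernels, it extracts different types of features from the input image. In a convolution layer, the feature map of the previous layer is convolved with the kernels of the current layer and a bias term is added. The result is then passed through a nonlinear activation function to give the final feature map of the layer. The convolution operation is:

$$
{\textit{z}} = {x_{l - 1}} * {k_i} + {b_i}, \qquad {x_l} = f({\textit{z}}) \tag{1}
$$

where ${x_{l - 1}}$ is the feature map of the previous layer; ${\textit{z}}$ is the feature map output directly by the convolution; ${x_l}$ is the final feature map obtained through the nonlinear activation function; ${k_i}$ is the convolution kernel; ${b_i}$ is the bias term; "*" denotes convolution; and $f(\cdot)$ is the nonlinear activation function, usually the ReLU function. In practice, a pooling layer is usually placed after a convolution layer to reduce the computational complexity of the network and improve its robustness. Common pooling operations include average pooling and max pooling. For example, max pooling is computed as:

$$
s_{m,n} = \max_{(p,q) \in W_{m,n}} x_{p,q} \tag{2}
$$

where $W_{m,n}$ is the $(m,n)$-th $h \times w$ pooling window on the feature map $x$ and $s_{m,n}$ is the corresponding pooled output.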

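According to Eq. (2), pooling processes the feature map with an $h \times w$ sliding window, removing redundant information from the original feature map and thereby improving the overall robustness of the network. At the end of the CNN, a multi-class classifier (usually Softmax) determines the target category. Softmax is a typical probabilistic classifier: it computes the posterior probabilities that the current input sample belongs to each class and then assigns the class with the maximum probability. CNN-based SAR target recognition has been widely studied, and a variety of new network architectures have been proposed [18-21]. Building on previous research and on our own experience in network design and testing, this paper adopts the CNN architecture described in Table 1. The network contains five convolution layers and four max-pooling layers; each convolution layer uses ReLU as its activation function, and each of the first four convolution layers is followed by max pooling. At the end of the network, Softmax serves as the classifier and outputs an $N$-dimensional class label vector (where $N$ is the number of classes involved in classification), from which the target category is determined. To match the network structure, each original image is cropped or padded to an 88×88 matrix that serves as the network input.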
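The convolution, bias, ReLU, and max-pooling steps described above can be illustrated with a minimal pure-Python sketch for one channel and one kernel. It assumes stride-1 "valid" convolution without padding and non-overlapping $h \times w$ pooling, which is consistent with the feature-map sizes in Table 1; like most CNN libraries, it implements the convolution as cross-correlation (the kernel is not flipped). The function names are illustrative and are not taken from the paper.

```python
from typing import List

Matrix = List[List[float]]


def conv2d_valid(x: Matrix, k: Matrix, b: float) -> Matrix:
    """z = x * k + b: stride-1 'valid' 2-D convolution (implemented as
    cross-correlation, i.e. without flipping k) plus a scalar bias."""
    kh, kw = len(k), len(k[0])
    out_h, out_w = len(x) - kh + 1, len(x[0]) - kw + 1
    z = [[0.0] * out_w for _ in range(out_h)]
    for r in range(out_h):
        for c in range(out_w):
            acc = b
            for i in range(kh):
                for j in range(kw):
                    acc += x[r + i][c + j] * k[i][j]
            z[r][c] = acc
    return z


def relu(z: Matrix) -> Matrix:
    """Element-wise activation f(z) = max(0, z)."""
    return [[max(0.0, v) for v in row] for row in z]


def max_pool(x: Matrix, h: int = 2, w: int = 2) -> Matrix:
    """Non-overlapping h x w max pooling; incomplete border windows are dropped."""
    out_h, out_w = len(x) // h, len(x[0]) // w
    return [
        [max(x[r * h + i][c * w + j] for i in range(h) for j in range(w))
         for c in range(out_w)]
        for r in range(out_h)
    ]


# Toy example: 5x5 input, 2x2 kernel -> 4x4 feature map -> 2x2 after pooling.
x = [[float(5 * r + c) for c in range(5)] for r in range(5)]
k = [[1.0, 0.0], [0.0, 1.0]]
pooled = max_pool(relu(conv2d_valid(x, k, b=-20.0)))
print(pooled)  # [[0.0, 2.0], [18.0, 22.0]]
```

The negative bias makes ReLU zero out the upper-left part of the feature map, so the pooled output shows both the clipping and the window-wise maximum.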

| Layer | Convolution/pooling kernel | Size of feature map |
|---|---|---|
| Input | - | 88×88×1 |
| Convolution 1 | 5×5×20 | 84×84×20 |
| Pooling 1 | 2×2×20 | 42×42×20 |
| Convolution 2 | 5×5×40 | 38×38×40 |
| Pooling 2 | 2×2×40 | 19×19×40 |
| Convolution 3 | 4×4×80 | 16×16×80 |
| Pooling 3 | 2×2×80 | 8×8×80 |
| Convolution 4 | 3×3×160 | 6×6×160 |
| Pooling 4 | 2×2×160 | 3×3×160 |
| Convolution 5 | 3×3×N | 1×1×N |
| Softmax | - | N |

Table 1. Descriptions of different layers in CNN
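As a quick consistency check on Table 1, the sketch below recomputes each feature-map size. It assumes stride-1 valid convolutions and 2×2 max pooling with stride 2; these are inferred from the listed sizes rather than stated in the paper. `num_classes` stands for $N$; the default of 10 is only an example, matching the ten MSTAR classes.

```python
def table1_shapes(input_size: int = 88, num_classes: int = 10):
    """Return (layer, height, width, channels) for each layer in Table 1."""
    # (layer name, kernel size, output channels); kernel None marks 2x2 pooling
    layers = [
        ("Convolution 1", 5, 20), ("Pooling 1", None, None),
        ("Convolution 2", 5, 40), ("Pooling 2", None, None),
        ("Convolution 3", 4, 80), ("Pooling 3", None, None),
        ("Convolution 4", 3, 160), ("Pooling 4", None, None),
        ("Convolution 5", 3, num_classes),
    ]
    size, channels = input_size, 1
    shapes = [("Input", size, size, channels)]
    for name, kernel, out_channels in layers:
        if kernel is None:
            size //= 2                 # 2x2 max pooling, stride 2
        else:
            size = size - kernel + 1   # valid convolution, stride 1
            channels = out_channels
        shapes.append((name, size, size, channels))
    return shapes


for name, h, w, c in table1_shapes():
    print(f"{name}: {h}x{w}x{c}")
```

The 4×4 kernel in Convolution 3 is what turns the odd 19×19 map into an even 16×16 map that 2×2 pooling can halve exactly; the final 3×3 kernel collapses the 3×3 map to a single position per class.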

-

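Through its Softmax classifier, the CNN gives the posterior probabilities that the current test sample belongs to each training class. In general, the target category of the test sample is determined by the maximum-probability principle. However, when the maximum probability is very close to other probabilities, the decision is not very reliable. Suppose the posterior probabilities of the $C$ training classes are $\left[ {{P_1},{P_2}, \cdots ,{P_C}} \right]$. This paper defines the reliability of the CNN decision as:

$$
R = \frac{{P_K}}{\max\limits_{j \ne K} {P_j}}, \qquad {P_K} = \max_{1 \le i \le C} {P_i} \tag{3}
$$

where ${P_K}$ is the maximum probability. The confidence coefficient $R$ is the ratio of the largest to the second-largest probability, so $R \ge 1$; the larger $R$ is, the more reliable the CNN decision based on the maximum posterior probability. Setting a suitable threshold on the confidence coefficient effectively screens the CNN output decisions. When the confidence exceeds the threshold, the current decision is considered reliable, and the target recognition of the test sample is completed by the CNN alone. Otherwise, the CNN result is not sufficiently reliable, and the test sample needs further analysis to obtain a more reliable result. For this reason, this paper checks the reliability of the CNN decision. When the CNN gives a reliable result, its decision is adopted directly as the final result. Otherwise, several training classes are selected according to the posterior probabilities output by the CNN to construct a dictionary; SRC then further classifies the test sample, and its result is fused with the CNN result so that the final recognition is more accurate.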
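The confidence coefficient and the threshold test reduce to a few lines. The following is a minimal sketch; the function names are hypothetical, the default `t1 = 1.2` is the value of $T_1$ reported in the experimental settings, and returning infinity when the second-largest probability is zero is our own assumption.

```python
from typing import Sequence


def confidence_ratio(probs: Sequence[float]) -> float:
    """R = largest posterior / second-largest posterior (so R >= 1)."""
    if len(probs) < 2:
        raise ValueError("need posteriors for at least two classes")
    top, second = sorted(probs, reverse=True)[:2]
    if second == 0.0:
        return float("inf")  # every other class has zero probability
    return top / second


def cnn_decision_is_reliable(probs: Sequence[float], t1: float = 1.2) -> bool:
    """Accept the CNN's maximum-posterior decision only if R exceeds T1."""
    return confidence_ratio(probs) > t1


print(confidence_ratio([0.70, 0.20, 0.10]))          # about 3.5
print(cnn_decision_is_reliable([0.70, 0.20, 0.10]))  # True
print(cnn_decision_is_reliable([0.40, 0.38, 0.22]))  # False, R is about 1.05
```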

-

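SRC reconstructs the test sample by sparse linear representation and compares the reconstruction errors of the individual training classes [15-17]. Denote the dictionary constructed from all training classes as $A = [{A^1},{A^2}, \cdots ,{A^C}] \in {{\rm{R}}^{d \times N}}$, where ${A^i} \in {{\rm{R}}^{d \times {N_i}}}(i = 1,2, \cdots ,C)$ contains the training samples of the $i$-th class, $d$ is the feature dimension, and $N = \sum_{i=1}^{C} N_i$ is the total number of training samples. The sparse reconstruction of the test sample $y$ is:

$$
\hat \alpha = \arg\min_{\alpha} {\left\| \alpha \right\|_0} \quad {\rm{s.t.}} \quad \left\| y - A\alpha \right\|_2^2 \le \varepsilon \tag{4}
$$

where $\alpha $ is the coefficient vector to be solved and $\varepsilon $ is the reconstruction error bound. Eq. (4) can be solved with algorithms such as ${\ell _{\rm{1}}}$ minimization, orthogonal matching pursuit (OMP), and Bayesian compressive sensing. Using the solved coefficient vector $\hat \alpha $, the reconstruction error of each class for the test sample is computed, and the class is determined as:

$$
r(i) = \left\| y - {A^i}{{\hat \alpha }_i} \right\|_2^2 \ (i = 1,2, \cdots ,C), \qquad {\rm{identity}}(y) = \arg\min_{i} r(i) \tag{5}
$$

where ${\hat \alpha _i}$ is the part of $\hat \alpha $ associated with the $i$-th class. Previous studies have shown that, compared with classic classifiers such as the nearest neighbor classifier and SVM, SRC maintains more robust classification performance under non-ideal conditions such as noise interference and partial occlusion. Since CNN may cope poorly with extended operating conditions, using SRC for a further decision helps determine the category of an unknown test sample more accurately and improves recognition accuracy.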
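A minimal NumPy sketch of SRC follows, using OMP (one of the solvers listed above) for the sparse coding step. Several details are assumptions not specified in the paper: dictionary columns and the test vector are normalized to unit length, the sparsity level and stopping tolerance are free parameters, and the class residual is the squared $\ell_2$ norm. The columns of `A` stand for feature vectors (PCA features in the experiments); all names are hypothetical.

```python
import numpy as np


def omp(A: np.ndarray, y: np.ndarray, sparsity: int, tol: float = 1e-6) -> np.ndarray:
    """Orthogonal matching pursuit: greedy approximation of
    min ||alpha||_0 s.t. ||y - A alpha||_2 <= tol (at most `sparsity` atoms)."""
    residual = y.astype(float).copy()
    support: list = []
    coef = np.zeros(0)
    for _ in range(min(sparsity, A.shape[1])):
        if np.linalg.norm(residual) <= tol:
            break
        idx = int(np.argmax(np.abs(A.T @ residual)))  # most correlated atom
        if idx in support:
            break
        support.append(idx)
        # least-squares refit on all selected atoms, then update the residual
        coef, *_ = np.linalg.lstsq(A[:, support], y, rcond=None)
        residual = y - A[:, support] @ coef
    alpha = np.zeros(A.shape[1])
    alpha[support] = coef
    return alpha


def src_residuals(A: np.ndarray, labels, y: np.ndarray, sparsity: int = 10) -> dict:
    """Class-wise reconstruction errors r(i) = ||y - A^i alpha_i||_2^2."""
    labels = np.asarray(labels)
    A_n = A / np.linalg.norm(A, axis=0, keepdims=True)  # unit-norm atoms
    y_n = y / np.linalg.norm(y)
    alpha = omp(A_n, y_n, sparsity)
    residuals = {}
    for c in np.unique(labels):
        alpha_c = np.where(labels == c, alpha, 0.0)  # keep class-c coefficients only
        residuals[c.item()] = float(np.sum((y_n - A_n @ alpha_c) ** 2))
    return residuals


# Toy check: 20 random 50-dimensional atoms, 10 per class; y lies in class 1's span.
rng = np.random.default_rng(0)
A = rng.normal(size=(50, 20))
labels = [0] * 10 + [1] * 10
y = 0.6 * A[:, 12] + 0.4 * A[:, 15]
res = src_residuals(A, labels, y, sparsity=5)
print(min(res, key=res.get))  # predicted class
```

OMP is used here only because it is short to write out; an $\ell_1$ solver or Bayesian compressive sensing could replace `omp` without changing the class-residual step.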

-

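When the CNN decision is judged unreliable, SRC is used to make a further decision on the test sample. However, since the CNN classification result can still provide useful information for a correct decision, this paper fuses the classification results of CNN and SRC based on Bayesian theory [22]. Denote the $M$ screened training classes as $\left\{ {{\Omega _1},{\Omega _2}, \cdots ,{\Omega _M}} \right\}$, and the decision events of CNN and SRC as ${\varLambda _1}$ and ${\varLambda _2}$, respectively. Their probability results are:

$$
P({\varLambda _j}) = \left[ {p_1^j,p_2^j, \cdots ,p_M^j} \right], \quad j = 1,2 \tag{6}
$$

where $p_i^j(i = 1,2, \cdots ,M;j = 1,2)$ is the probability that CNN ($j = 1$) or SRC ($j = 2$) assigns to class ${\Omega _i}$. Assuming that the decisions of CNN and SRC are mutually independent, their joint probability is computed as:

$$
P({\Omega _i}\left| {{\varLambda _1},{\varLambda _2}} \right.) = \frac{{p_i^1p_i^2}}{{\sum\nolimits_{k = 1}^M {p_k^1p_k^2} }}, \quad i = 1,2, \cdots ,M \tag{7}
$$

The target category of the test sample is then determined by the maximum-probability principle:

$$
{\rm{identity}}(y) = \arg\max_{i} P({\Omega _i}\left| {{\varLambda _1},{\varLambda _2}} \right.) \tag{8}
$$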
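Under the independence assumption, the fusion step is an element-wise product followed by normalization and an argmax. The sketch below assumes equal class priors, as the normalized product does; the function names are hypothetical. Because only the product matters, rescaling the CNN probabilities over the $M$ candidate classes does not change the decision.

```python
from typing import List, Sequence


def bayes_fuse(p_cnn: Sequence[float], p_src: Sequence[float]) -> List[float]:
    """Posterior over the M candidate classes, assuming independent CNN/SRC
    decisions and equal class priors: P(Omega_i | L1, L2) ∝ p_i^1 * p_i^2."""
    if len(p_cnn) != len(p_src):
        raise ValueError("both decisions must cover the same candidate classes")
    joint = [a * b for a, b in zip(p_cnn, p_src)]
    total = sum(joint)
    if total <= 0.0:
        raise ValueError("joint probability is zero for every candidate class")
    return [j / total for j in joint]


def fused_decision(p_cnn: Sequence[float], p_src: Sequence[float]) -> int:
    """Index of the candidate class with the largest fused probability."""
    fused = bayes_fuse(p_cnn, p_src)
    return max(range(len(fused)), key=fused.__getitem__)


# CNN slightly prefers candidate 0, SRC clearly prefers candidate 1.
p_cnn = [0.45, 0.40, 0.15]
p_src = [0.30, 0.55, 0.15]
print([round(p, 3) for p in bayes_fuse(p_cnn, p_src)])
print(fused_decision(p_cnn, p_src))  # 1
```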

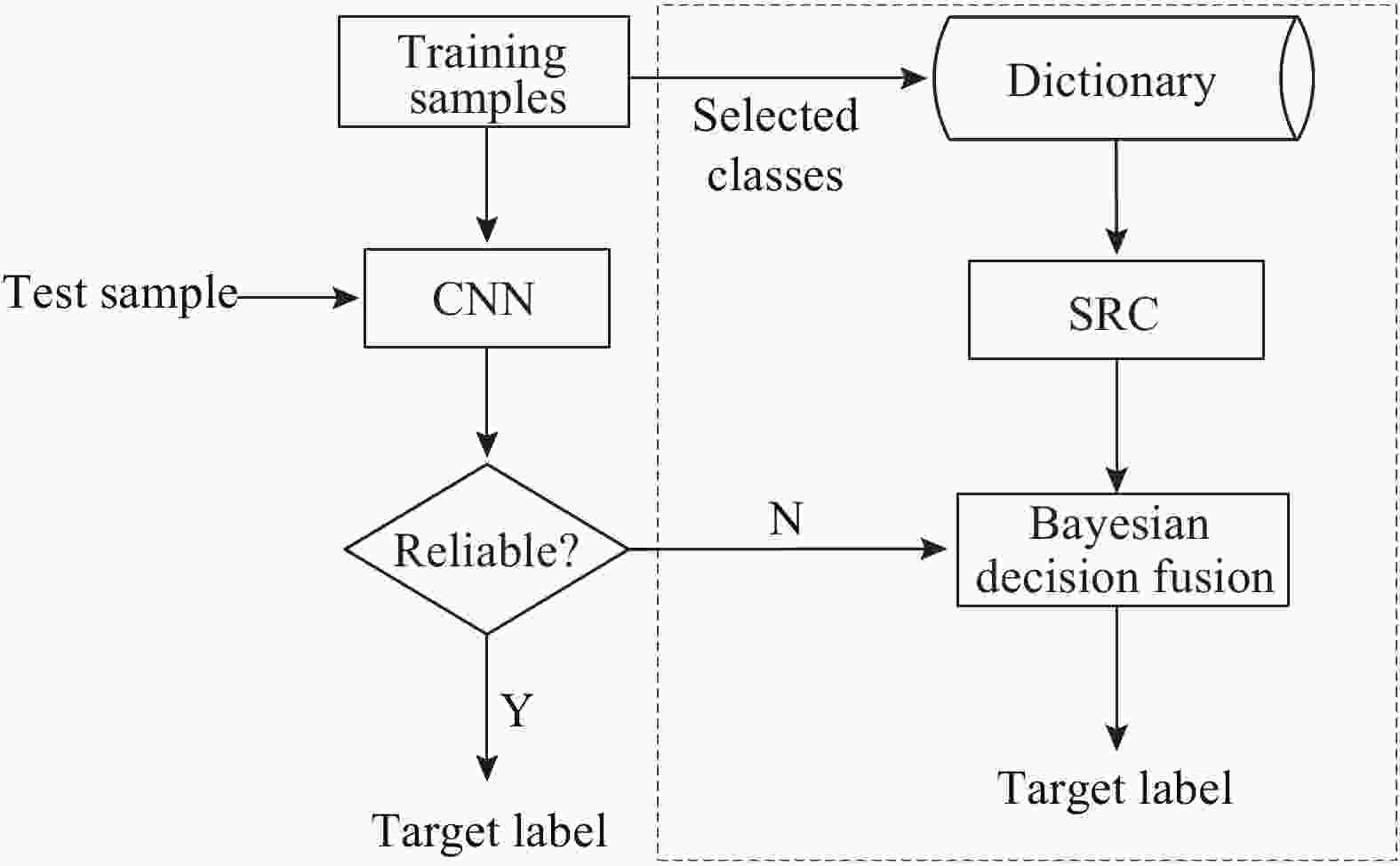

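This paper performs Bayesian decision fusion on the decision variables that CNN and SRC output for the candidate classes. When the CNN cannot give a reliable recognition result, the target category of the test sample is determined from the fused decision values. Figure 1 shows the basic flow of the proposed method. The test sample is first classified by the CNN, which gives its posterior probabilities for each training class and an initial class by the maximum-probability principle. Then the reliability of the current decision is computed as described in Section 1.2. If the reliability is higher than the threshold ${T_1}$, the current decision is considered reliable, the target category is output directly, and recognition ends. Otherwise, the training classes whose posterior probabilities exceed the threshold ${T_2}$ are selected to construct a dictionary, and the current test sample is classified by SRC. Finally, the decisions of SRC and CNN are fused based on Bayesian theory to obtain the target category.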
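The hierarchical flow can be sketched end to end as follows. This is a minimal illustration, not the authors' implementation: SRC is abstracted as a hypothetical callable that returns class residuals for a given candidate set, the mapping from SRC residuals to probabilities ($p_i \propto 1/r(i)$) is our assumption because the paper does not specify it, and so is the fallback used when fewer than two classes pass $T_2$. The defaults `t1 = 1.2` and `t2 = 0.25` are the thresholds reported in the experimental settings.

```python
from typing import Callable, Dict, List, Sequence

# Hypothetical signature: given candidate class indices, return SRC residuals
# r(i) computed with a dictionary built from those classes' training samples.
SrcResidualFn = Callable[[List[int]], Dict[int, float]]


def residuals_to_probs(residuals: Dict[int, float], eps: float = 1e-12) -> Dict[int, float]:
    """ASSUMED mapping (not given in the paper): p_i proportional to 1 / r(i)."""
    inv = {c: 1.0 / (r + eps) for c, r in residuals.items()}
    total = sum(inv.values())
    return {c: v / total for c, v in inv.items()}


def recognize(p_cnn: Sequence[float], src_residuals: SrcResidualFn,
              t1: float = 1.2, t2: float = 0.25) -> int:
    """Hierarchical CNN -> SRC -> Bayesian-fusion decision for one test sample."""
    order = sorted(range(len(p_cnn)), key=lambda i: p_cnn[i], reverse=True)
    best, second = order[0], order[1]
    # Step 1: accept the CNN decision if R = P_K / P_second exceeds T1.
    if p_cnn[second] == 0.0 or p_cnn[best] / p_cnn[second] > t1:
        return best
    # Step 2: candidate classes whose CNN posterior exceeds T2.
    candidates = [i for i in order if p_cnn[i] > t2]
    if len(candidates) < 2:
        candidates = order[:2]  # ASSUMED fallback; the paper does not cover this case
    # Step 3: SRC on the candidate dictionary, then Bayesian fusion of both decisions.
    p_src = residuals_to_probs(src_residuals(candidates))
    joint = {c: p_cnn[c] * p_src[c] for c in candidates}
    return max(joint, key=joint.get)


# Toy SRC stub standing in for a real SRC classifier (hypothetical residuals).
toy_residuals = {0: 0.9, 1: 0.8, 2: 0.3, 3: 0.9, 4: 0.9}


def toy_src(candidates: List[int]) -> Dict[int, float]:
    return {c: toy_residuals[c] for c in candidates}


print(recognize([0.10, 0.70, 0.20], toy_src))              # 1: R = 3.5, CNN accepted
print(recognize([0.05, 0.40, 0.36, 0.10, 0.09], toy_src))  # 2: R ≈ 1.11, fused with SRC
```

In the second call the CNN narrowly favors class 1, but the candidate set {1, 2} is passed to SRC, whose much smaller residual for class 2 outweighs the CNN margin after fusion.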

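To verify the performance of the proposed method experimentally, tests are conducted under several conditions set up on the MSTAR dataset. The MSTAR dataset contains SAR images of ten classes of ground targets: BMP2, BTR70, T72, T62, BRDM2, BTR60, ZSU23/4, D7, ZIL131, and 2S1. For each class, the SAR images cover azimuth angles from 0° to 360° (at intervals of 1° to 2°) and typical elevation angles such as 15°, 17°, 30°, and 45°. Targets such as BMP2 and T72 also include several variants.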

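For the proposed method, based on several preliminary tests, the thresholds ${T_1}{\rm{ = }}1.2$ and ${T_2}{\rm{ = }}0.25$ are used for judging the reliability of the CNN decision and for screening the candidate classes, respectively. Several existing methods are tested for comparison: an SVM-based method [12], an SRC-based method [13], and a CNN-based method [15]. The SVM and SRC methods use PCA for feature extraction, as does the proposed method.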

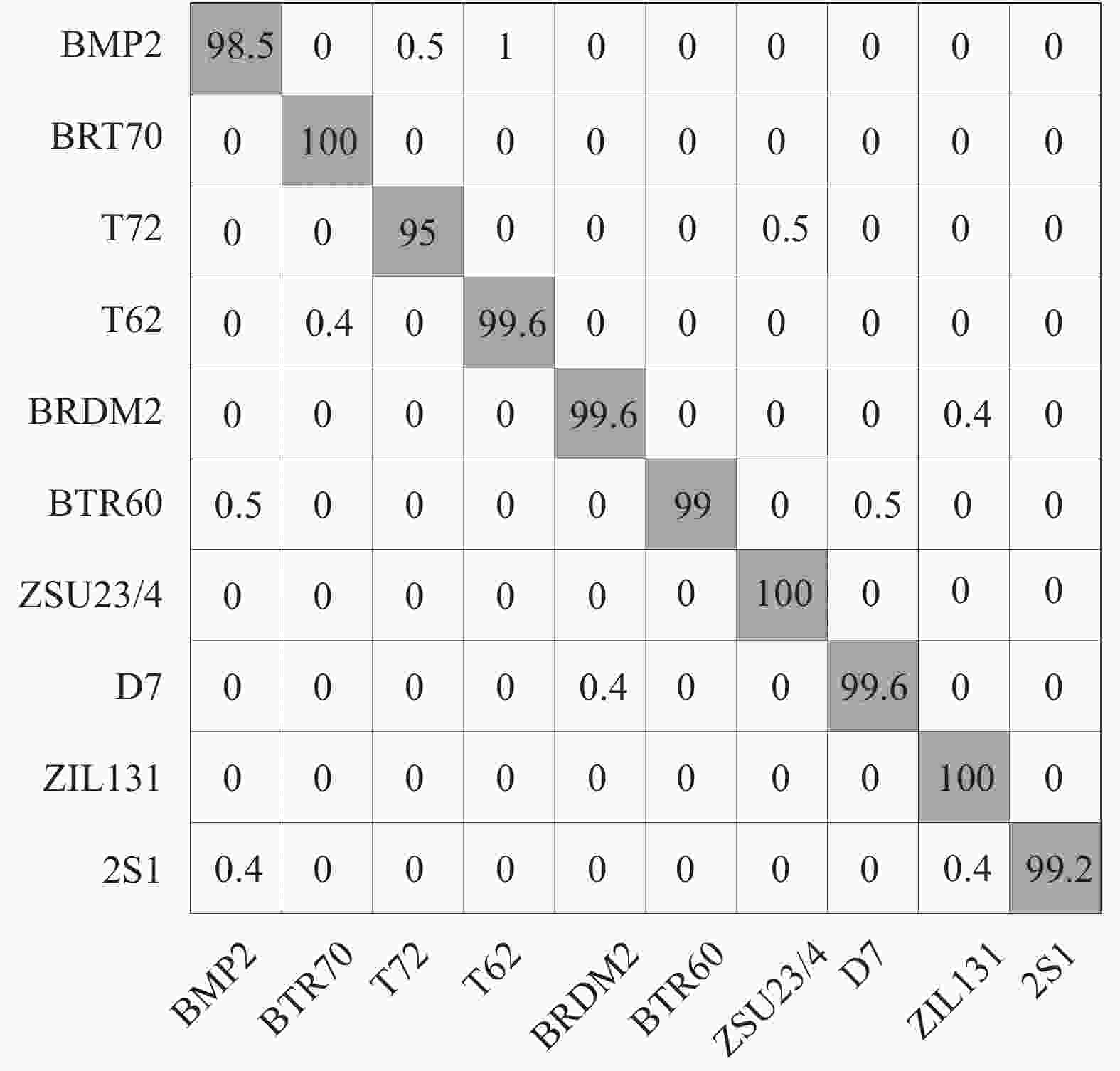

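When the test samples are highly similar to the established training sample library, the recognition condition is called the standard operating condition (SOC); this is experiment 1 of this paper. Table 2 gives the SOC setting of this experiment: the test and training samples of the 10 classes differ only by 2° in elevation angle. Moreover, there are more training samples than test samples, so the training set covers the various situations in the test samples well. Figure 2 shows the classification confusion matrix of the proposed method; the horizontal and vertical axes represent the actual class and the classification result, respectively, and the diagonal elements give the correct recognition rate of each target. The classification accuracy of every class is around 99%, and some classes are recognized with 100% accuracy. Table 3 lists the average recognition rates of all methods under experiment 1; the proposed method achieves the highest rate, 99.36%. Because the training samples describe the test samples well, the CNN method alone already reaches 99.08%. The proposed method further classifies the few samples that the CNN cannot reliably recognize by SRC and decision fusion, which further improves the final recognition performance.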

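| Class | Training elevation angle/(°) | Training sample amount | Testing elevation angle/(°) | Testing sample amount |
|---|---|---|---|---|
| BMP2 | 17 | 214 | 15 | 174 |
| BTR70 | 17 | 214 | 15 | 175 |
| T72 | 17 | 213 | 15 | 175 |
| T62 | 17 | 278 | 15 | 256 |
| BRDM2 | 17 | 277 | 15 | 257 |
| BTR60 | 17 | 234 | 15 | 174 |
| ZSU23/4 | 17 | 278 | 15 | 249 |
| D7 | 17 | 278 | 15 | 249 |
| ZIL131 | 17 | 278 | 15 | 249 |
| 2S1 | 17 | 278 | 15 | 249 |

Table 2. Training and testing samples under experiment 1: Including 10-class targets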

| Recognition method | Ours | SVM | SRC | CNN |
|---|---|---|---|---|
| Average recognition rate | 99.36% | 98.64% | 98.23% | 99.08% |

Table 3. Average recognition rates under experiment 1

-

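By adjusting some structures and configurations of a target, several variants of that target can be obtained for different uses. In the MSTAR dataset, BMP2 and T72 each have several variants, which makes it possible to verify the robustness of recognition methods to configuration differences; this is experiment 2 of this paper. The test and training sets of this experiment are listed in Table 4: for these two targets, the test samples and training samples come from entirely different variants. In addition, the elevation-angle difference between training and test samples remains small, so the main factor affecting recognition is the configuration difference. Table 5 compares the classification accuracy of the methods under experiment 2. Compared with the standard operating condition, the average recognition rates of all methods decrease. The proposed method still achieves the highest average recognition rate in Table 5. For test samples that differ considerably from the training samples, the classification ability of CNN is limited, so its recognition rate drops noticeably compared with experiment 1. In the proposed method, SRC further checks the samples that CNN cannot reliably classify, and the final decision fusion with CNN raises the classification confidence. Therefore, under configuration differences, combining CNN and SRC further improves the overall recognition robustness.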

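| Class | Training elevation angle/(°) | Training configuration | Training sample amount | Testing elevation angle/(°) | Testing configuration | Testing sample amount |
|---|---|---|---|---|---|---|
| BMP2 | 17 | 9563 | 214 | 15 | 9566 | 175 |
| | | | | | c21 | 175 |
| BTR70 | 17 | c71 | 214 | 15 | c71 | 175 |
| T72 | 17 | 132 | 213 | 15 | 812 | 174 |
| | | | | | s7 | 167 |

Table 4. Training and testing samples under experiment 2: Including 3-class targets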

| Recognition method | Ours | SVM | SRC | CNN |
|---|---|---|---|---|
| Average recognition rate | 95.42% | 92.58% | 92.14% | 93.96% |

Table 5. Average recognition rates under experiment 2

-

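Under a large elevation-angle difference, even two SAR images of the same target at the same azimuth angle may look very different. Using the multi-elevation samples provided by the MSTAR dataset, the test and training sets of this experiment are set up as in Table 6 to test the performance of the methods under large elevation-angle differences; this is experiment 3 of this paper. For the three targets 2S1, BRDM2, and ZSU23/4, the test samples are collected at two different elevation angles, 30° and 45°, while the training samples include only samples at 17°. Moreover, the three targets have no other differences, such as configuration, under this setting, so the main factor affecting recognition is the elevation-angle difference. Table 7 shows the average recognition rates of the methods at the two test elevation angles. At 45°, the recognition rates of all methods drop sharply, mainly because of the large image changes caused by the elevation-angle difference. The proposed method achieves the best performance at both angles, which verifies its robustness to elevation-angle differences. As with configuration differences, SRC and CNN are complementary in recognizing different samples, and fusing them appropriately improves recognition robustness.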

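| Class | Training elevation angle/(°) | Training sample amount | Testing elevation angle/(°) | Testing sample amount |
|---|---|---|---|---|
| 2S1 | 17 | 277 | 30 | 267 |
| | | | 45 | 285 |
| BRDM2 | 17 | 276 | 30 | 266 |
| | | | 45 | 285 |
| ZSU23/4 | 17 | 277 | 30 | 267 |
| | | | 45 | 285 |

Table 6. Training and testing samples under experiment 3: Including 3-class targets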

| Recognition method | Ours | SVM | SRC | CNN |
|---|---|---|---|---|
| Average recognition rate (30°) | 97.56% | 94.52% | 95.87% | 97.04% |
| Average recognition rate (45°) | 71.64% | 66.64% | 65.42% | 67.56% |

Table 7. Average recognition rates under experiment 3

-

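In SAR target recognition, training samples of targets of interest, especially non-cooperative targets, are very limited. The recognition algorithm must therefore obtain robust results with only a small number of training samples. Taking the training and test samples in Table 2 as the baseline, a certain proportion of the training samples is randomly selected for training; this is experiment 4 of this paper. Figure 3 shows the recognition rate curves of the methods when the proportion of training samples is 80%, 60%, 40%, and 20%. The proposed method achieves the highest average recognition rate at every proportion, which verifies its robustness with limited training samples. Although CNN classifies poorly with few training samples, the candidate classes it screens still retain the true class of the test sample with high probability. In this case, further SRC classification and decision fusion with CNN yield a more accurate recognition result.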
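Experiment 4 keeps a random fraction of the training set. The paper does not say whether the draw is made per class or over the whole set; the sketch below assumes per-class (stratified) sampling with a fixed seed, keeping at least one sample per class. The function name and sample identifiers are hypothetical; the class sizes are the BMP2 and ZSU23/4 training counts from Table 2.

```python
import random
from collections import defaultdict
from typing import Hashable, List, Sequence, Tuple


def subsample_training_set(samples: Sequence, labels: Sequence[Hashable],
                           ratio: float, seed: int = 0) -> Tuple[List, List]:
    """Randomly keep about `ratio` of the training samples of every class
    (per-class sampling is an assumption; at least one sample per class)."""
    if not 0.0 < ratio <= 1.0:
        raise ValueError("ratio must be in (0, 1]")
    rng = random.Random(seed)
    by_class = defaultdict(list)
    for sample, label in zip(samples, labels):
        by_class[label].append(sample)
    kept_samples, kept_labels = [], []
    for label in sorted(by_class, key=str):  # fixed order -> reproducible draws
        pool = by_class[label]
        k = max(1, round(ratio * len(pool)))
        chosen = rng.sample(pool, k)
        kept_samples.extend(chosen)
        kept_labels.extend([label] * k)
    return kept_samples, kept_labels


# Example with the BMP2 (214) and ZSU23/4 (278) training counts from Table 2.
samples = [f"bmp2_{i}" for i in range(214)] + [f"zsu234_{i}" for i in range(278)]
labels = ["BMP2"] * 214 + ["ZSU23/4"] * 278
for r in (0.8, 0.6, 0.4, 0.2):
    kept, kept_labels = subsample_training_set(samples, labels, r)
    print(r, len(kept))
```

Stratified sampling keeps every class represented at each ratio, which matters most at 20%, where a purely global draw could leave small classes with very few samples.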

-

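This paper proposes a SAR target recognition method that combines the decisions of CNN and SRC. The CNN first classifies the test sample; if it reaches a reliable decision, the recognition task is completed and the result is output. Otherwise, the CNN decision values are used to screen a small number of candidate targets to construct a dictionary, on which SRC further classifies the test sample. Finally, the results of CNN and SRC are fused based on Bayesian theory to determine the target category of the test sample. The method was tested on the MSTAR dataset, and the results show that it maintains superior performance under the standard operating condition, configuration differences, elevation-angle differences, and limited training samples. In particular, compared with methods that use CNN or SRC alone, the proposed method improves recognition performance in all four experiments through decision fusion. These results verify the effectiveness of the proposed method.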

Decision fusion of CNN and SRC with application to SAR target recognition

doi: 10.3788/IRLA20210421

- Received Date: 2021-12-20

- Rev Recd Date: 2022-01-10

- Publish Date: 2022-04-07

-

Key words:

- synthetic aperture radar /

- target recognition /

- convolutional neural network /

- sparse representation-based classification /

- Bayesian fusion

Abstract: A synthetic aperture radar (SAR) target recognition method based on decision fusion of a convolutional neural network (CNN) and sparse representation-based classification (SRC) was proposed. CNN learned the multi-level features of SAR images through a deep network and then determined the target category. Studies had shown that CNN could achieve good recognition performance with sufficient training samples. However, for conditions not covered by the training samples, the classification performance of CNN usually decreased significantly. Therefore, the test sample to be identified was first classified by CNN, and the reliability of the current classification result was calculated from the output decision values (i.e., the posterior probabilities of the training categories). When the classification result was judged to be reliable, the decision of CNN was adopted directly and the target category of the test sample was output. Otherwise, several candidate categories were screened according to the decision values output by CNN, and a dictionary was constructed from their training samples for SRC. The classification result of SRC was then fused with that of CNN by a Bayesian fusion algorithm. Finally, the target category of the test sample was determined from the fused result. The proposed method integrated the advantages of CNN and SRC in a hierarchical way, which helped exploit each of them under different test conditions and improved recognition robustness. Experiments were carried out on the MSTAR dataset, and the results verified the effectiveness of the proposed method.
